Fixing broken boot from encrypted devices after upgrade to Debian Bookworm

My workstation uses LUKS to encrypt two NVMe SSDs, on top of which I run lvm2 to create one big filesystem. This setup worked nicely for the last couple of years (more details will follow below). But after the upgrade from Debian 11 (Bullseye) to Debian 12 (Bookworm) my system refused to boot.

More concretely, I was greeted as always with the prompt to decrypt my root device, and that step still worked as expected, i.e. I received the “setup device successfully” message from the initramfs. However, the boot then failed to mount the resume / swap device and finally gave up completely, reporting that it couldn’t mount the root device, with this lovely message:

ALERT! /dev/mapper/pixie--vg-root does not exist.

Debug attempts

Initramfs debug shell

Searching the web led to a relevant-looking thread on forums.debian.net, which gave some initial ideas. In particular, I could make some progress using these two commands:

vgchange -ay

vgchange -ay --activationmode partial

Afterwards, my /dev/mapper/ showed the expected devices. However, I couldn’t mount them, hence also couldn’t follow the further advice there.

What is clear to me now but wasn’t at the time is that I should have exited the initramfs emergency shell (via exit) and tried to continue to boot the system. This likely would have worked and allowed me to debug and fix the issue from the machine directly.

Rescue mode from USB stick

Instead, I created a USB stick with the netinst installation image for Debian 12 and booted from the stick into rescue mode (in the advanced options). This nicely detected my hardware and then prompted me to decrypt each of my SSDs.

That’s when I figured out that my two NVMe devices were encrypted separately and did not even use the same password. I had forgotten this, but my password store contained both passwords, so I could decrypt both devices.

Fixing the problem

Wrong device name

I had read in a Reddit thread that Debian uses a modified crypttab(5) format, adding an option initramfs to mark the root device, resume devices and any others that need handling in the initramfs phase of the boot. This option was missing, so I added it to the entries in my crypttab and tried to update the initramfs via update-initramfs -u.

Unfortunately, this threw an error about a source mismatch for one of my devices and also gave a warning that the cipher method for decryption could not be figured out. After a while, I realized that my /etc/crypttab specified nvme0n1p3_crypt as the device but that it should really be nvme1n1p3_crypt (note nvme0 vs. nvme1). I’m not sure if this error had been in there all the time (and if so, why it worked before) or if something changed with Bookworm, triggering a device name change.

After correcting this error, I could finally update the initramfs and rebooted. Unfortunately, I ran into the same problem on boot.
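The check for the missing initramfs option described above can be sketched as a small shell function. This is only an illustration, not part of any Debian tooling: the function name missing_initramfs_opt is made up here, and it assumes the usual four-column crypttab layout (name, source, key file, options).

```shell
#!/bin/sh
# Hypothetical helper: print the names of crypttab entries whose options
# field (4th column) does not contain "initramfs".
# Usage: missing_initramfs_opt [FILE]   (FILE defaults to /etc/crypttab)
missing_initramfs_opt() {
    awk '
        NF == 0 || $1 ~ /^#/ { next }      # skip blank lines and comments
        {
            n = split($4, opts, ",")       # options are comma-separated
            found = 0
            for (i = 1; i <= n; i++)
                if (opts[i] == "initramfs") found = 1
            if (!found) print $1           # report the mapped device name
        }
    ' "${1:-/etc/crypttab}"
}
```

Calling missing_initramfs_opt without arguments reads /etc/crypttab; any name it prints is an entry that would still need the option added before re-running update-initramfs.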

Key file not accessible

This time while debugging in the initramfs emergency shell (and also searching for hints on the web), it became clear to me that my other device nvme0n1p1 was the actual culprit. In particular, I was using a key file (stored in /etc/keys/nvme0n1p1.luks) to decrypt this device automatically, which is also the reason I had forgotten about the two differing passwords for the devices.

So, I edited the crypttab once more to no longer reference the key file, changing /etc/crypttab from:

nvme1n1p3_crypt UUID=[elided] none luks,initramfs

nvme0n1p1_crypt UUID=[elided] /etc/keys/nvme0n1p1.luks luks,initramfs

to the new version:

nvme1n1p3_crypt UUID=[elided] none luks,initramfs

nvme0n1p1_crypt UUID=[elided] none luks,initramfs

After another update-initramfs -u -k all, I could finally again boot into my system. Of course, I now have to give both passwords on boot.

I’m not sure if this is a recent change, but apparently now you need to specify a keyfile pattern in /etc/cryptsetup-initramfs/conf-hook. So, I now have the following in this file:

root@pixie:/etc/cryptsetup-initramfs# grep ^KEYFILE_PATTERN conf-hook

KEYFILE_PATTERN=/etc/keys/*

After reverting the change to the crypttab above and re-running update-initramfs, the automatic decryption of the second device works again.
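To see why the key file path has to fit the pattern, here is a minimal sketch of the kind of shell-pattern match involved. It assumes, as the comments in conf-hook describe, that KEYFILE_PATTERN is interpreted as a shell pattern and compared against the key file column of the crypttab; the function name matches_keyfile_pattern is hypothetical and this is not the actual hook code.

```shell
#!/bin/sh
# Hypothetical helper: succeed if key file path $1 matches shell pattern $2.
# The unquoted $2 inside "case" is treated as a pattern, not as a literal.
matches_keyfile_pattern() {
    case $1 in
        $2) return 0 ;;    # path matches the pattern
        *)  return 1 ;;    # no match, e.g. "none" or a path elsewhere
    esac
}

# Example with the values from this post:
if matches_keyfile_pattern /etc/keys/nvme0n1p1.luks '/etc/keys/*'; then
    echo "key file matches KEYFILE_PATTERN"
fi
```

With an empty pattern nothing except an empty path matches, which would be consistent with the key file not being available during boot before I set KEYFILE_PATTERN.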

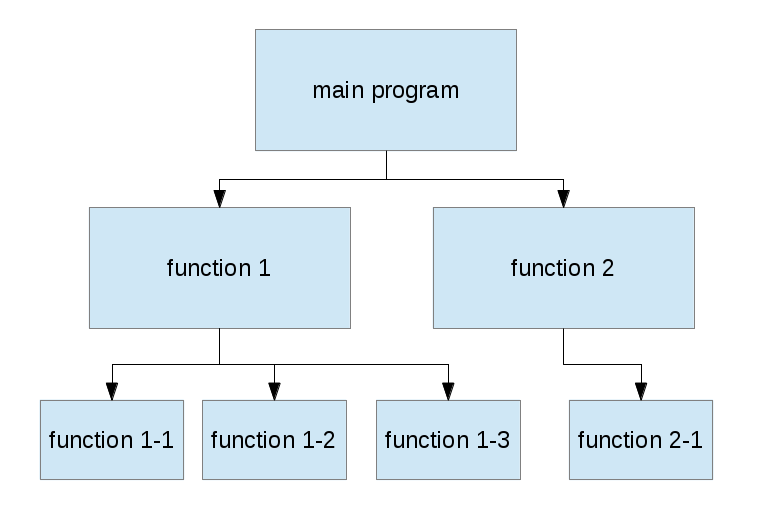

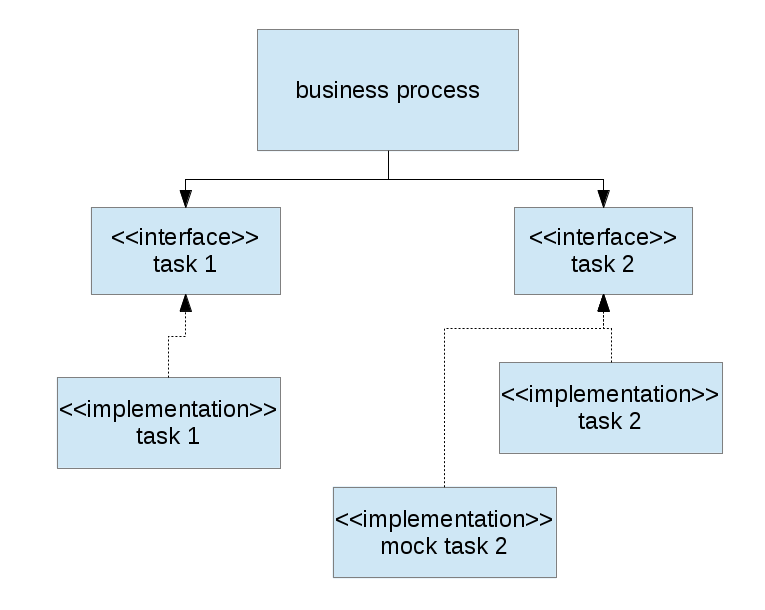
