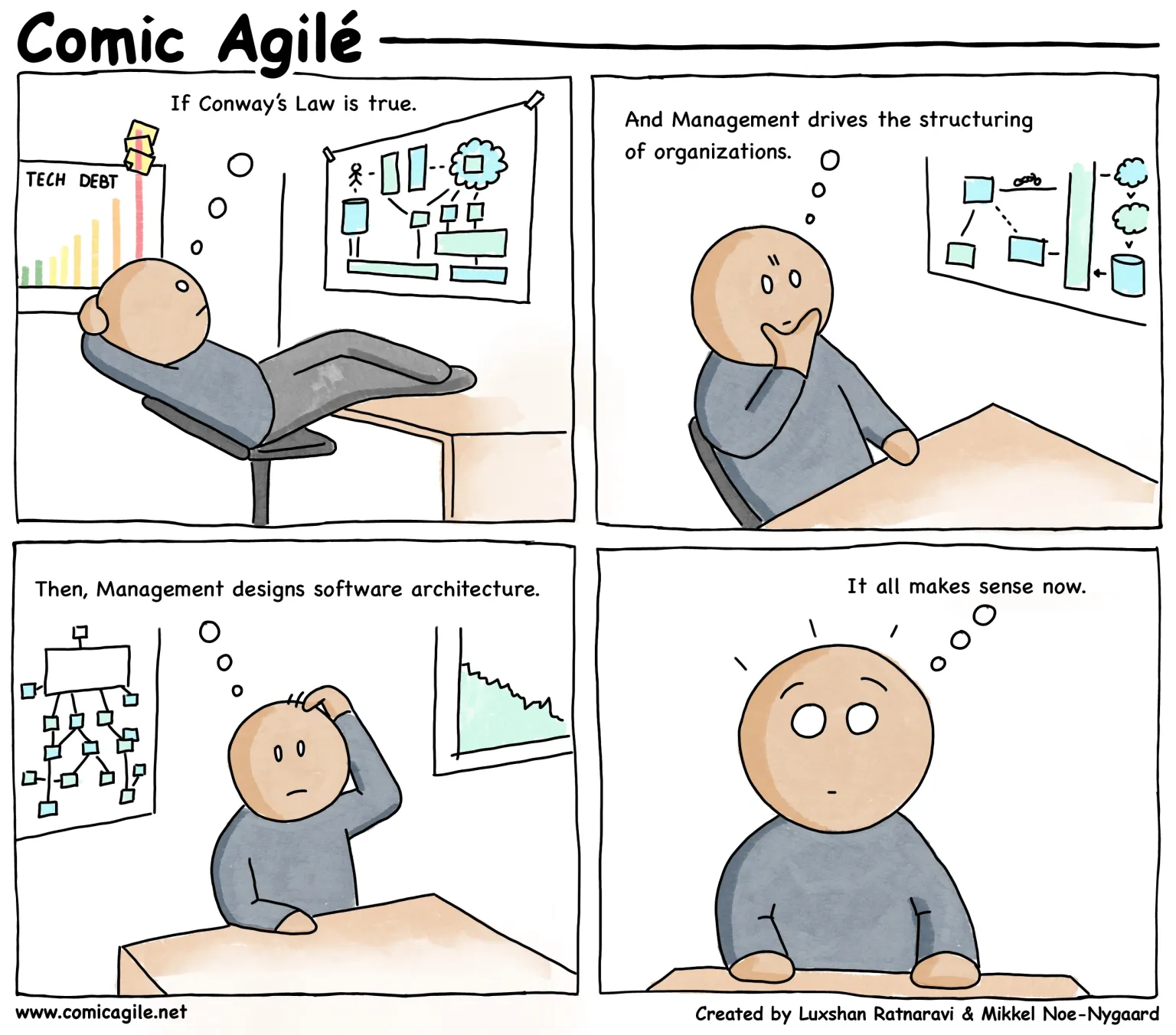

Conway's law and the role of managers

Allen Holub had an interesting (post, tweet) toot about managers deciding team structure and software architecture:

If management is setting up team structure, they are designing your architecture. They know nothing about architecture, though. Self-organizing teams are essential. (See Conway’s Law). — Allen Holub

I mean, who am I to disagree with Allen Holub? I agree completely with his conclusion, but not with this argument. It might seem a little unfair of me to react to his tiny toot with a lengthy blog post, as I’m sure Allen could talk at length about the topic with tons of good insights, so please don’t take it as a criticism of his post but as a way to express my thinking about how I look at Conway’s law and the role of managers.

Out of the ivory tower

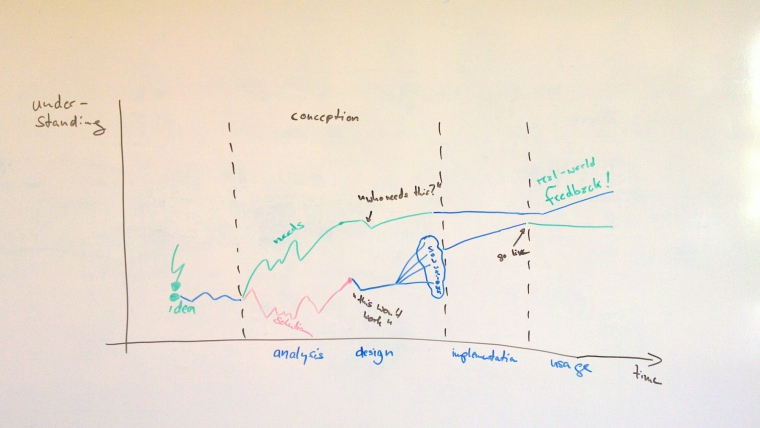

First of all, I guess it depends on who you are referring to with “management”. If Allen is talking about top level management, then I agree that they usually don’t know about your architecture. But is this also true for the middle managers close to the teams doing the work? This level of management should know about architecture in my humble opinion, to avoid making foolish mistakes and to understand what the developers are talking about.

I think managers today should know about both architecture and Conway’s law. Ideally, they should also know about the Inverse Conway Maneuver (as discussed, e.g., in Martin Fowler’s article on Conway’s Law), which is simply the idea that you could structure your teams according to the desired design of your system. Assuming the managers understand the architecture of the system (both how it’s structured currently and its future target state), I think that managers can make use of this strategy when organizing their teams.

Communication is key

Any organization that designs a system (defined broadly) will produce a design whose structure is a copy of the organization’s communication structure. — Melvin E. Conway

Second, I think it is worth pointing out that Conway’s Law is not about how system architecture and team structure interact, but about the influence of an organization’s communication structure. Now, organizations are often re-structured with the implicit assumption that org structure matches communication structure. But this might be a bit naive, because organizations not only have a formal organization but also an informal one (see this overview of formal vs. informal organization). The latter is quite important for how communication will actually happen in my experience. So a change in the formal organization does not necessarily mean the relevant communication channels that drove your architecture before will suddenly disappear. In other words, people will remember that Eva knows a lot about the data access layer and will include her in relevant discussions, regardless of which team she’s in.

Also, as discussed in Fowler’s article on Conway’s Law, it is questionable whether Conway’s Law applies to all organization sizes. If you have two very small teams, in which communication among all people is easy and natural, there are a lot of other options than ending up with two components. Sure, if the communication between your people is complicated, you can expect to see this as friction in the architecture. So, if Fred doesn’t like working with Eva, he might be inclined to put in a completely new, separate data access layer. However, this might also happen if Fred and Eva are within the same group (I wouldn’t call them and their peers a team if that happens).

The Wikipedia page on Conway’s Law discusses supporting evidence that modularity of systems is directly related to how tightly coupled your organization is. So, managers shouldn’t just work on reporting lines but also on enabling good communication between the teams — while you might not be able to influence the informal structure, you can nevertheless try to set up good channels within the formal org structure. I certainly hope that you’re working in an organization which actively promotes cross-team collaboration and understands the benefit of networking, and not one in which you have to move up the org chart to find the highest shared person to convey information and to drive decisions.
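To make the point about communication channels a bit more concrete, here is a minimal sketch of how one might check an architecture against the channels that actually exist. Everything in it is an assumption for illustration: the module names, the team names, the `owners`, `dependencies`, and `channels` data, and the helper `unsupported_dependencies` are all hypothetical and not taken from any of the sources mentioned above. The idea is simply to list module dependencies that cross a team boundary where no channel, formal or informal, connects the two teams.

```python
# Hypothetical data: which team owns which module.
owners = {
    "web_ui": "team_a",
    "orders": "team_a",
    "data_access": "team_b",
    "billing": "team_c",
}

# Hypothetical module dependencies as (caller, callee) pairs.
dependencies = [
    ("web_ui", "orders"),
    ("orders", "data_access"),
    ("orders", "billing"),
]

# Hypothetical communication channels between teams (formal or informal).
channels = {frozenset({"team_a", "team_b"})}


def unsupported_dependencies(owners, dependencies, channels):
    """Return dependencies that cross a team boundary without a channel."""
    gaps = []
    for caller, callee in dependencies:
        teams = frozenset({owners[caller], owners[callee]})
        # A single-element set means both modules belong to the same team.
        if len(teams) == 2 and teams not in channels:
            gaps.append((caller, callee))
    return gaps


print(unsupported_dependencies(owners, dependencies, channels))
```

With this made-up data, the only flagged dependency is the one between `orders` and `billing`, because nothing connects `team_a` and `team_c`. That is the kind of spot where, following Conway’s law, you would expect friction to show up in the design.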

End to end work

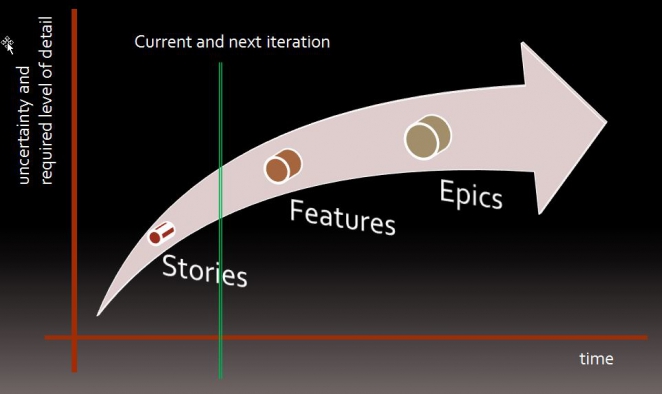

Third, managers should be aware of the idea that working in feature teams is usually better than working in component teams (cf. Roman Pichler’s more nuanced take on feature vs. component teams): the idea here is that a feature team would have all the capabilities to deliver a feature end to end, i.e. it does not depend on other teams. If your system already has a good design to support your feature development, then your cross-capability feature teams should be able to use and extend it. Naturally, if your system is big enough, there might be more to know about the architecture than you can fit into the heads of your team. That’s when you’ll again need good communication channels across your organization. This will not invalidate Conway’s law, but it might ensure that a change in the architecture isn’t driven by one team’s decision alone.

Constant change is inevitable

Fourth, team and communication structure is a much richer and deeper topic that people have been thinking and writing about like forever, and only alluding to Conway’s law ignores many other insights. E.g., team topologies is an approach that looks at an organization’s structure from the point of view of communication mechanisms and minimizing the cognitive load of the people working within it. If you take a look at team topologies in a nutshell, you’ll see that it also tries to tackle the “pushing against Conway’s law” problem. If you follow the route outlined in how to get started with team topologies, you end up looking at the same fundamental pieces: the architecture and the communication routes. That’s a tool that managers can use (and there are more, like Jurgen Appelo’s unfix framework).

And then you have all the good insights from Heidi Helfand that these days we’re constantly changing our team structures anyway, cf. Dynamic Reteaming. As she discusses, our teams change for so many reasons: people leaving, people joining, specific organizational needs, etc. It’s only natural that a team structure will evolve over time, just like system architecture does (team topologies also expects your organization to evolve over time). It sounds a little bit over the top to me to always pull the teams together and trigger a self-selection event in these cases (not that Allen is suggesting this). If we reduced our thinking about team structure and reteaming to the technical or system architecture view only, we would swap one mistake (ignoring the technical implications) for another (ignoring people interactions and other needs), which would arguably be even worse.

Conclusion

So, what does this leave us with? That managers should move people around like chess pieces, like they’ve always done, and that’s that? No, of course not. As I said, I’m very much in agreement that self-organizing teams are essential. The people who are close to the work should have an important say in discussing the organizational structure. They can bring in the knowledge about architecture and technical dependencies, and they will also bring in their preferences and personal needs, and all of this is very important. But I also believe managers need to be involved, too, to clarify other needs to be considered (e.g. business needs, future organization plans like hiring, etc.). Plus you don’t want to burden engineers with having to handle constant re-teaming, because engineers usually don’t like it. Otherwise they would have moved into management already.

Oh, and finally there’s a nice slide deck from Eberhard Wolff on why he’s tired of Conway’s law, which you should really click through if you’ve read this far.
