Moving targets -- on agile conception

Two things are clear for every project and every product: there is always a start and an end. I’ll ignore the end for now and would like to take a closer look at the start. One has to wonder: where do all these projects or new products come from? Obviously, somebody finds out at one point that there is a need for something and this something is the nucleus of the perspective development. If this idea requires significant effort, it’s typically necessary to convince the people responsible for the money that is worthwhile to pursue the idea. Let’s take a closer look at what this something actually is and how it changes and needs to change before the developers can finally go make it real.

To use a more descriptive tag, I will use the term feature idea for now, although we could well talk about ideas for a new product or product line. Feature ideas in a very early stage are rarely more than a single, simple statement or even just a few descriptive keywords like “I would like a button here where I can save my settings” or “remember me functionality”. Of course, whoever came up with the idea has typically second thoughts, more or less fuzzy but these are not communicated, neither discussed nor finalized.

Quite often these second thoughts are related to some existing systems or solutions, even if it’s just to describe what the respective solutions do not provide (note that these solutions might not be your own). Typically feature ideas are about some specific expected behavior of some system and basically boil down to what needs to be build. This is all good: feature ideas don’t need further elaboration or lengthy descriptions in an early state.

How do you proceed from here to the nitty-gritty details that finally need to be done? In traditional settings, to get from the idea for a larger feature to development you need to jump over the budget hurdle, which usually requires detailed analysis results, including business cases, return on investment calculations, and implementation plans. The latter consist of solution outline, milestones, resource plans and cost requirements. It is important to note that to come up with all this substantial efforts need to be invested already. The bigger the need for precise information, the bigger the required investment. And then at some point you hopefully get the okay to move ahead. But in case the idea is turned down, all this investment in detailed plans was just wasted. In agile setups, we would like to avoid that waste. Still, of course, the need for getting a decision on where the business wants to invest its money does not go away. But in order to do so, does the senior management really need all those details? They don’t. There is a need for accuracy, not for precision (cf. for a description of the difference). Asking for all the details is a sure sign for lack of trust, but I have never seen any senior management trying to verify that all those precise details were actually accurate, which probably explains why the demand for documents and spreadsheets is all there is. This is then typically combined with taking all plans as promises that need to be kept and not as means to enable successful investments (command-and-control vs. plan-to-replan).

What is surely needed boils down to just one thing: understanding the value of an investment. It’s often much easier to determine what you want or can invest than to precisely estimate upfront what you need to invest (cf. this discussion of the no estimates idea which shows the wonderful relevant Dilbert strip on the same issue). On top-level, investment is essentially tied to strategy: e.g. in the Scaled Agile Framework (SAFe, cf. Leffingwell et al.), investment themes determine the relation between budget (resources) and the program portfolio of business epics. This essentially requires an understanding how each possible business epic or feature idea contributes to the overall company strategy (this is basically applying the Lean principle of “optimize the whole” to feature ideas).

We essentially danced around the big elephant in the room now: feature ideas often describe expected behavior, but we need to know about the value of an idea. But if all we have is a simple statement (and not the full-fledged analysis we would like to avoid) of some idea, how can we know its value? The essential insight is that it might not be necessary to understand the precise value yet. The earlier in the process we are, the less is it necessary to perform lengthy and detailed analysis: determining the value of some idea is only a means to further a decision on where to invest further. However, at least it should be possible to judge the value of one feature idea in relation or better said in comparison to other ideas, as Johanna Rothman discusses in her article on why cost is the wrong question for evaluation projects: at least in an early stage, it might be enough to use a very simple kind of rough value sizing, e.g. putting an “M” on one feature idea in comparison to a “L” for another. It is important to recognize that the value of ideas will change over time: in particular so when looked at in relation to other things your business needs to think about, as new ideas come up , some ideas get implemented and other discarded. Accounting for these changes over time is why planning to replan is important in agile methodologies. An important concept in agile methodologies that helps making this clear is the cost of delay: the idea is that you try to understand or predict how the value contribution of some feature will change over time, in particular what impact not pursuing the idea will have. This is most useful for prioritizing by cost of delay of what to invest in — obviously, as CoD is all about loss of value over time, as time moves on you want to reconsider your priorities (where you want to drill down further with an analysis etc.). (An alternative coming from the lean camp is to use an impact-effort matrix, but such a matrix needs an estimate about the effort required and also treats value as an absolute over time, which it usually isn’t. Cf. this article about cost of delay vs. WSJF in SAFe for a discussion why cost of delay and value considerations are not the same).

Inevitably, when you try to think about the value of some feature idea, you will think about what this simple statements like “remember me functionality” should actually mean. Very often the way in which we name or describe a potential feature is often a confusion (and at best a conflation) of problem and solution space and that can make it quite difficult to judge it’s value. As such, naive feature descriptions can quickly become a big impediment. For one thing, they are — due to being explicitly simple — usually ambiguous and / or often hard to understand. Does “remember functionality” describe that I stay logged in on the website or does it mean that the website remembers the visitor and shows specific ads related to his interests or both? This is often the reason why some people attribute completely different value to an idea. Worse, they typically don’t give any clue about the need behind the wanted behavior nor about the value this behavior brings. Just as bad is that simple statements of the feature idea in terms of expected behaviour (“I want a button that …”) limits creativity by already pointing in the direction of a possible solution: maybe there are other options which are much better suited, both from a usability point of view but also with regard to the required functionality. So the conflation of problem and solution is actually problematic for understanding the problem and for designing the solution.

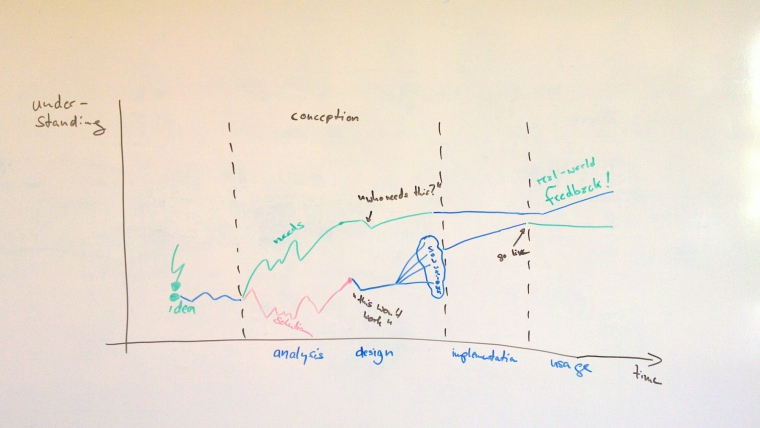

So what is the process to move from a feature idea to the solution design? I think it’s useful to see feature idea statements as promises for analysis. The analysis of a feature idea should essentially focus on understanding the user and / or business needs the idea is related to. The stereotypical user story template “As a <type of persona>, I want to <perform X> in order <to achive Y>” is a useful tool in this respect as it is explicitly constructed to include and name the need (cf. Gojko Adzic rant Writing “As a user” does not make it a user story how this can go wrong when taken on too easy). Now you might not have such a story or might not have it yet and maybe it’s not clear what this simple feature idea is about. That’s the time to engage with whoever seems able to contribute, but first and foremost it should probably be the primary beneficiaries of the feature idea. Traditional requirements engineering practice would suggest to involve all relevant stakeholders (perspective users, management, marketing, legal department, …) and to employ whatever RE tool seems reasonable to use (e.g. brainstorming, workshops, open interviews, etc.). In agile setups, determining who actually is a stakeholder which needs to be heard is the responsibility of the product owner. I find that it is usually also a good idea to involve the team, because they will typically have a good understanding of the solution space and should also know about the envisioned user needs. Don’t take it too far, though: If you are still trying to analyze in order to understand the value of some idea, i.e. you are still at an early stage (inception phase as the disciplined agile delivery folks would have it) of your planned feature, you should probably try to keep it light-weight.

You will likely get a lot of input on your feature idea and many in the form of comparable simple statements to what you originally started out with. Understanding the needs requires going beyond the level of simple statements or simple suggestions of simple solutions (Laura Klein has a nice description of how difficult that can be: Sometimes users will tell you that they want a toaster in their car, when what they really mean is that they don’t have time to make breakfast in the morning.). And although the famous 5 Whys might come across somewhat strange or invasive in the course of an interview, it might take that many attempts to actually understand the problem that needs to be solved. If you’re lucky, a potential user will offer some simple insight as to the problem with simple statements such as “I would like a button here so I can save my settings, so I don’t have to adjust them again and again”. Further analysis might reveal that the real problem is that there is a usability problem and the user forgets to adjust the settings too often or that the user is a regular user which would like to spend less time with repetitive tasks. The main point here is that this process is about understanding the needs, which typically requires challenging any superficial and obvious ideas: this is both about maximizing the amount of work not done (simplicity) and about building solutions that satisfy the user.

This is now where the team basically takes over: the team is responsible for working out the solution, not only constructing / implementing it, but essentially designing the solution. This is not to say that the product owner or other stakeholders cannot or should not contribute ideas on how to solve it, quite to the contrary. It is quite essential to have stakeholders around to enable the knowledge transfer of needs from the users or stakeholders to the team. So while the separate paragraphs of this text might suggest a handover from product owner to development team, from needs to solutions, ideally this should be a smooth transition with lots of participation of the entire team and face-to-face communication. Tools like Roman Pichler’s product canvas are useful, but there is a reason why the agile manifesto recommends people interactions over tool usage. Again, for working out the solutions many ideas and tools can and should be exploited, from brainstorming over UI scribbles to models and prototypes. Typically when designing the solution, many alternatives come to mind and different people from different backgrounds will have different opinions and favorites. This is actually good, because you can embrace this diversity to think about the best possible solution. However, the best solution might not actually be the solution you want to implement, often the simplest thing that could possibly work is a reasonable way to move forward: this minimizes the effort required, thereby enabling faster time to market and thereby enabling earlier feedback on whether this solution actually works (this is the tension between trying to build the best thing vs. learning fast from feedback about what actually proves to work). People with lots of experience in designing solutions will typically also come up with all kind of challenging questions which might require drilling further down into the needs (e.g. questioning if the feature is targeted at the right people or what the legal department has to say to some feature). This will trigger more learning about the feature under consideration. But this should not be taken as an excuse to run endless analysis or design meetings: it is often a better approach to end a discussion with a decision and move ahead and find out later whether this decision was actually flawed and needs correction. It is often a good idea to consider the risks associated with some idea: the more risk, the closer you want to consider your options. In general, when in doubt about which option to chose, think about what data would be helpful to decide: performing light-weight experiments might be useful for minimizing these risks (e.g. technical spikes or usability tests with paper-pen sketches).

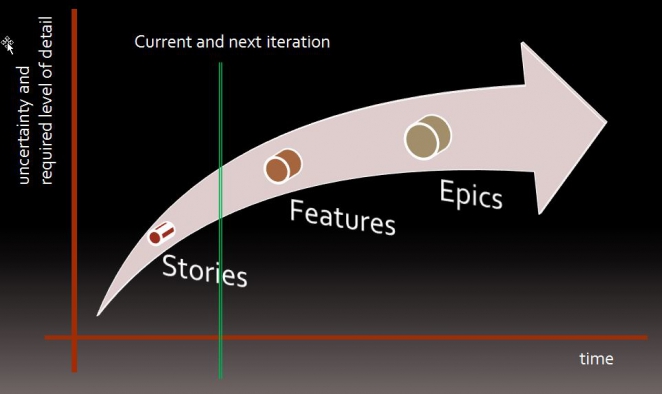

A brief terminology recap: an epic is just some requirement (feature idea) that is so “large” and / or uncertain that it needs to be broken down, i.e. nobody could take an epic and just implement it, the developers just have to come back and ask lots of questions. If you wanted to you could say after breaking down an epic, all you end up with are features but in agile circles these are usually split up into even smaller stories (cf. the image below). Of course, this is a completely arbitrary and often also highly context-dependent distinction. It’s also fairly common to use the user story template for describing epics. I used the term feature idea instead of epics to bring in some notion of time: epics start out as (feature or other) ideas. If you think about the timeline again, it’s clear that the closer you are to implementing some feature, the more details you need to know, i.e. the higher your level of understanding of the feature needs to be. And this is where the idea comes into play that you need to INVEST in your user stories, so that you can derive SMART tasks. This is obviously needed for the very next things you want to implement, and as any risk-aware tech guy or project lead will know, probably also a good idea for the next most things to do afterwards. Business cases are mainly needed when you are going to go for big investments and when it’s critical to understand the return on investment. But this brings us back to the discussion of value vs. cost and the discussion of precision vs. accuracy. Use cases, finally, are a useful tool when it comes to understanding some set of requirements in the context of a larger system. They can also be useful for driving discussions with stakeholders and are often a useful starting point if you like to model your problem / design before implementation. I see use cases as a useful tool on the feature level, to cover a broader range of more detailed stories. As such it does not matter much when to describe a use case: if it helps you drive your understanding of some problem, use it before drilling down to more fine-grained descriptions. If you already have a lot of details and you fear to lose the overview, it can also make sense to reconstruct some use case from more detailed stories.

Finally, let’s step back briefly and re-consider who is part of this solution team. It’s quite common that for feature ideas of different sizes, different people will make up the solution team. I.e. when your feature idea is concerned with some detail of some larger system, the team might just be the developer team (in the Scrum sense). For bigger features, projects or programs, it’s quite common that lead developers, design and usability specialists, solution and / or enterprise architects and maybe operational staff will play an important part in working out the solution. Whether these people are working in specialist teams (as e.g. SAFe assumes) or are part of the development teams is not of much importance, but having members of those teams working on the solution design is quite essential: if this is not the case, in the best case, the final developers will only have to rediscover and understand all the good reasons why you came up with the solution in the first place, in order to get it right. Worst, you might end up with frustrated developers which don’t take responsibility and don’t care about the build solution, because they feel they have no saying in its design.